Download the official Helm charts:

git clone https://github.com/elastic/helm-charts.git

Create the namespace:

kubectl create namespace efk
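`kubectl create namespace` fails if the namespace already exists, which gets in the way when the setup is re-run. The sketch below wraps the step in a small helper; the function name `ensure_namespace` is made up for illustration and is not part of kubectl.

```shell
#!/usr/bin/env bash
# Create the namespace only when it is missing, so the setup can be repeated safely.
# ensure_namespace is a hypothetical helper name used for this example.
ensure_namespace() {
  local ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}

# Usage (needs kubectl configured for the target cluster):
#   ensure_namespace efk
```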

1. Deploy and configure Elasticsearch

cd helm-charts

vim elasticsearch/values.yaml

Edit the settings marked with the author's added comments.

---

clusterName: "elasticsearch"
nodeGroup: "master"

# The service that non master groups will try to connect to when joining the cluster
# This should be set to clusterName + "-" + nodeGroup for your master group
masterService: ""

# Elasticsearch roles that will be applied to this nodeGroup
# These will be set as environment variables. E.g. node.master=true
roles:
  master: "true"
  ingest: "true"
  data: "true"

replicas: 3
minimumMasterNodes: 2

esMajorVersion: ""

# Allows you to add any config files in /usr/share/elasticsearch/config/
# such as elasticsearch.yml and log4j2.properties
esConfig: {}
#  elasticsearch.yml: |
#    key:
#      nestedkey: value
#  log4j2.properties: |
#    key = value

# Extra environment variables to append to this nodeGroup
# This will be appended to the current 'env:' key. You can use any of the kubernetes env
# syntax here
extraEnvs: []
#  - name: MY_ENVIRONMENT_VAR
#    value: the_value_goes_here

# Allows you to load environment variables from kubernetes secret or config map
envFrom: []
# - secretRef:
#     name: env-secret
# - configMapRef:
#     name: config-map

# A list of secrets and their paths to mount inside the pod
# This is useful for mounting certificates for security and for mounting
# the X-Pack license
secretMounts: []
#  - name: elastic-certificates
#    secretName: elastic-certificates
#    path: /usr/share/elasticsearch/config/certs
#    defaultMode: 0755

# Images hosted overseas download slowly, so switch to an Alibaba Cloud mirror
# (this is a public image; the address below can be used as-is).
image: "registry.cn-hangzhou.aliyuncs.com/wang_feng/elasticsearch-oss"
imageTag: "7.9.0"
imagePullPolicy: "IfNotPresent"

podAnnotations: {}
  # iam.amazonaws.com/role: es-cluster

# additional labels
labels: {}

esJavaOpts: "-Xmx1g -Xms1g"

resources:
  requests:
    cpu: "1000m"
    memory: "2Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"

initResources: {}
  # limits:
  #   cpu: "25m"
  #   # memory: "128Mi"
  # requests:
  #   cpu: "25m"
  #   memory: "128Mi"

sidecarResources: {}
  # limits:
  #   cpu: "25m"
  #   # memory: "128Mi"
  # requests:
  #   cpu: "25m"
  #   memory: "128Mi"

networkHost: "0.0.0.0"

volumeClaimTemplate:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "managed-nfs-storage" # use a StorageClass; see the end of the article for how to create one
  resources:
    requests:
      storage: 5Gi # storage size; kept small for this test environment

rbac:
  create: false
  serviceAccountAnnotations: {}
  serviceAccountName: ""

podSecurityPolicy:
  create: false
  name: ""
  spec:
    privileged: true
    fsGroup:
      rule: RunAsAny
    runAsUser:
      rule: RunAsAny
    seLinux:
      rule: RunAsAny
    supplementalGroups:
      rule: RunAsAny
    volumes:
      - secret
      - configMap
      - persistentVolumeClaim

persistence:
  enabled: true
  labels:
    # Add default labels for the volumeClaimTemplate of the StatefulSet
    enabled: false
  annotations: {}

extraVolumes: []
  # - name: extras
  #   emptyDir: {}

extraVolumeMounts: []
  # - name: extras
  #   mountPath: /usr/share/extras
  #   readOnly: true

extraContainers: []
  # - name: do-something
  #   image: busybox
  #   command: ['do', 'something']

extraInitContainers: []
  # - name: do-something
  #   image: busybox
  #   command: ['do', 'something']

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

# By default this will make sure two pods don't end up on the same node

# Changing this to a region would allow you to spread pods across regions

antiAffinityTopologyKey: "kubernetes.io/hostname"

# Hard means that by default pods will only be scheduled if there are enough nodes for them

# and that they will never end up on the same node. Setting this to soft will do this "best effort"

antiAffinity: "hard"

# This is the node affinity settings as defined in

# https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#node-affinity-beta-feature

nodeAffinity: {}

# The default is to deploy all pods serially. By setting this to parallel all pods are started at

# the same time when bootstrapping the cluster

podManagementPolicy: "Parallel"

# The environment variables injected by service links are not used, but can lead to slow Elasticsearch boot times when

# there are many services in the current namespace.

# If you experience slow pod startups you probably want to set this to false.

enableServiceLinks: true

protocol: http

httpPort: 9200

transportPort: 9300

service:
  labels: {}
  labelsHeadless: {}
  type: ClusterIP
  nodePort: ""
  annotations: {}
  httpPortName: http
  transportPortName: transport
  loadBalancerIP: ""
  loadBalancerSourceRanges: []
  externalTrafficPolicy: ""

updateStrategy: RollingUpdate

# This is the max unavailable setting for the pod disruption budget

# The default value of 1 will make sure that kubernetes won't allow more than 1

# of your pods to be unavailable during maintenance

maxUnavailable: 1

podSecurityContext:
  fsGroup: 1000
  runAsUser: 1000

securityContext:
  capabilities:
    drop:
    - ALL
  # readOnlyRootFilesystem: true
  runAsNonRoot: true
  runAsUser: 1000

# How long to wait for elasticsearch to stop gracefully

terminationGracePeriod: 120

sysctlVmMaxMapCount: 262144

readinessProbe:
  failureThreshold: 3
  initialDelaySeconds: 30
  periodSeconds: 10
  successThreshold: 3
  timeoutSeconds: 5

# https://www.elastic.co/guide/en/elasticsearch/reference/current/cluster-health.html#request-params wait_for_status
# Modified: cluster health check setting
#clusterHealthCheckParams: "wait_for_status=green&timeout=50s"
clusterHealthCheckParams: "wait_for_status=yellow&timeout=1s"

## Use an alternate scheduler.

## ref: https://kubernetes.io/docs/tasks/administer-cluster/configure-multiple-schedulers/

##

schedulerName: ""

imagePullSecrets: []

nodeSelector: {}

tolerations: []

# Enabling this will publicly expose your Elasticsearch instance.
# Only enable this if you have security enabled on your cluster
ingress:
  enabled: false
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - chart-example.local
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

nameOverride: ""

fullnameOverride: ""

# https://github.com/elastic/helm-charts/issues/63

masterTerminationFix: false

lifecycle: {}
  # preStop:
  #   exec:
  #     command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
  # postStart:
  #   exec:
  #     command:
  #       - bash
  #       - -c
  #       - |
  #         #!/bin/bash
  #         # Add a template to adjust number of shards/replicas
  #         TEMPLATE_NAME=my_template
  #         INDEX_PATTERN="logstash-*"
  #         SHARD_COUNT=8
  #         REPLICA_COUNT=1
  #         ES_URL=http://localhost:9200
  #         while [[ "$(curl -s -o /dev/null -w '%{http_code}\n' $ES_URL)" != "200" ]]; do sleep 1; done
  #         curl -XPUT "$ES_URL/_template/$TEMPLATE_NAME" -H 'Content-Type: application/json' -d'{"index_patterns":['\""$INDEX_PATTERN"\"'],"settings":{"number_of_shards":'$SHARD_COUNT',"number_of_replicas":'$REPLICA_COUNT'}}'

sysctlInitContainer:
  enabled: true

keystore: []

# Deprecated

# please use the above podSecurityContext.fsGroup instead

fsGroup: ""

Install Elasticsearch with Helm:

helm install -n efk elasticsearch elasticsearch/
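Instead of editing the chart's `values.yaml` in place, the few settings changed in this article could be kept in a separate override file and passed to Helm with `-f`. The sketch below writes such a file; the filename `es-overrides.yaml` is an arbitrary choice for this example, and the keys are the ones this article modifies.

```shell
#!/usr/bin/env bash
set -eu

# Write only the values changed in this article; all other settings keep the chart defaults.
# The filename es-overrides.yaml is a hypothetical choice for this example.
cat > es-overrides.yaml <<'EOF'
image: "registry.cn-hangzhou.aliyuncs.com/wang_feng/elasticsearch-oss"
imageTag: "7.9.0"
clusterHealthCheckParams: "wait_for_status=yellow&timeout=1s"
volumeClaimTemplate:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 5Gi
EOF

# Print the matching install command; run it from inside the helm-charts checkout.
echo "helm install -n efk elasticsearch elasticsearch/ -f es-overrides.yaml"
```

Keeping the overrides in their own file leaves the cloned chart untouched, which makes later `git pull` updates of the chart easier to apply.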

2. Deploy and configure Filebeat

vim filebeat/values.yaml

Edit the settings marked with the author's added comments.

---

# Allows you to add any config files in /usr/share/filebeat
# such as filebeat.yml
# Filebeat configuration: set which log paths to read and which Elasticsearch index to write to
filebeatConfig:
  filebeat.yml: |
    filebeat.inputs:
    - type: container # container input
      paths:
        - /var/log/containers/*.log # log location
      processors:
      - add_kubernetes_metadata:
          host: ${NODE_NAME}
          matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
    - type: container
      paths:
        - /var/lib/docker/containers/*/*.log
      fields:
        type: "kubernetes.container.name" # custom type used to select the index
      processors:
      - add_kubernetes_metadata:
          host: ${NODE_NAME}
          matchers:
          - logs_path:
              logs_path: "/var/lib/docker/containers/"

    output.elasticsearch: # send logs to Elasticsearch
      host: '${NODE_NAME}'
      hosts: '${ELASTICSEARCH_HOSTS:elasticsearch-master:9200}'
      indices:
        - index: "container-name-%{+yyyy.MM.dd}" # custom index name (one index per day)
          when.equals:
            fields.type: "kubernetes.container.name"

# Extra environment variables to append to the DaemonSet pod spec.
# This will be appended to the current 'env:' key. You can use any of the kubernetes env
# syntax here
extraEnvs: # set the locale
  - name: LANG
    value: en_US.UTF-8

extraVolumeMounts: # mount directories
  - name: shoplog
    mountPath: /shop_data/logs
    readOnly: true
  - name: sysdate
    mountPath: /etc/localtime

extraVolumes: # mount host directories
  - name: shoplog
    hostPath:
      path: /data/k8s/nas-e898efc3-324d-41d2-938a-bf02eb80e098/shop_data/logs
  - name: sysdate
    hostPath:
      path: /etc/localtime

extraContainers: ""
# - name: dummy-init
#   image: busybox
#   command: ['echo', 'hey']

extraInitContainers: []
# - name: dummy-init
#   image: busybox
#   command: ['echo', 'hey']

envFrom: []
# - configMapRef:
#     name: configmap-name

# Root directory where Filebeat will write data to in order to persist registry data across pod restarts (file position and other metadata).

hostPathRoot: /var/lib

hostNetworking: false

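# Images hosted overseas download slowly, so switch to an Alibaba Cloud mirror (a public image; the address below can be used as-is).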

image: "registry.cn-hangzhou.aliyuncs.com/wang_feng/filebeat-oss"

imageTag: "7.9.0"

imagePullPolicy: "IfNotPresent"

imagePullSecrets: []

livenessProbe:
  exec:
    command:
      - sh
      - -c
      - |
        #!/usr/bin/env bash -e
        curl --fail 127.0.0.1:5066
  failureThreshold: 3
  initialDelaySeconds: 10
  periodSeconds: 10
  timeoutSeconds: 5

readinessProbe:
  exec:
    command:
      - sh
      - -c
      - |
        #!/usr/bin/env bash -e
        filebeat test output
  failureThreshold: 3
  initialDelaySeconds: 10
  periodSeconds: 10
  timeoutSeconds: 5

# Whether this chart should self-manage its service account, role, and associated role binding.

managedServiceAccount: true

# additional labels
labels: {}

podAnnotations: {}
  # iam.amazonaws.com/role: es-cluster

# Various pod security context settings. Bear in mind that many of these have an impact on Filebeat functioning properly.
#
# - User that the container will execute as. Typically necessary to run as root (0) in order to properly collect host container logs.
# - Whether to execute the Filebeat containers as privileged containers. Typically not necessary unless running within environments such as OpenShift.
podSecurityContext:
  runAsUser: 0
  privileged: false

resources:
  requests:
    cpu: "100m"
    memory: "100Mi"
  limits:
    cpu: "1000m"
    memory: "200Mi"

# Custom service account override that the pod will use

serviceAccount: ""

# Annotations to add to the ServiceAccount that is created if the serviceAccount value isn't set.

serviceAccountAnnotations: {}

# eks.amazonaws.com/role-arn: arn:aws:iam::111111111111:role/k8s.clustername.namespace.serviceaccount

# A list of secrets and their paths to mount inside the pod
# This is useful for mounting certificates for security and other sensitive values
secretMounts: []
#  - name: filebeat-certificates
#    secretName: filebeat-certificates
#    path: /usr/share/filebeat/certs

# How long to wait for Filebeat pods to stop gracefully

terminationGracePeriod: 30

tolerations: []

nodeSelector: {}

affinity: {}

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

updateStrategy: RollingUpdate

# Override various naming aspects of this chart

# Only edit these if you know what you're doing

nameOverride: ""

fullnameOverride: ""

Install Filebeat with Helm:

helm install -n efk filebeat filebeat/
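The `indices` rule in the Filebeat configuration sends events whose `fields.type` equals `kubernetes.container.name` to a daily index named `container-name-yyyy.MM.dd`. A small sketch for working out which index name to look for today follows; it assumes the date part is rendered in UTC, and the helper name `expected_index` is made up for this example.

```shell
#!/usr/bin/env bash
# Build the index name Filebeat is expected to write to today, mirroring the
# "container-name-%{+yyyy.MM.dd}" pattern from filebeat.yml.
# Assumption: the date portion is computed in UTC.
expected_index() {
  printf 'container-name-%s\n' "$(date -u +%Y.%m.%d)"
}

expected_index

# To check that the index exists (assumes Elasticsearch is reachable on
# localhost:9200, for example through a port-forward):
#   curl -s "http://localhost:9200/_cat/indices/$(expected_index)?v"
```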

3. Deploy and configure Kibana

vim kibana/values.yaml

Edit the settings marked with the author's added comments.

---

# Elasticsearch host address

elasticsearchHosts: "http://elasticsearch-master:9200"

replicas: 1

# Extra environment variables to append to this nodeGroup

# This will be appended to the current 'env:' key. You can use any of the kubernetes env

# syntax here

extraEnvs:
  - name: "NODE_OPTIONS"
    value: "--max-old-space-size=1800"
#  - name: MY_ENVIRONMENT_VAR
#    value: the_value_goes_here

# Allows you to load environment variables from kubernetes secret or config map
envFrom: []
# - secretRef:
#     name: env-secret
# - configMapRef:
#     name: config-map

# A list of secrets and their paths to mount inside the pod
# This is useful for mounting certificates for security and for mounting
# the X-Pack license
secretMounts: []
#  - name: kibana-keystore
#    secretName: kibana-keystore
#    path: /usr/share/kibana/data/kibana.keystore
#    subPath: kibana.keystore # optional

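# Images hosted overseas download slowly, so switch to an Alibaba Cloud mirror (a public image; the address below can be used as-is).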

image: "registry.cn-hangzhou.aliyuncs.com/wang_feng/kibana-oss"

imageTag: "7.9.0"

imagePullPolicy: "IfNotPresent"

# additional labels
labels: {}

podAnnotations: {}
  # iam.amazonaws.com/role: es-cluster

resources:
  requests:
    cpu: "1000m"
    memory: "2Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"

protocol: http

serverHost: "0.0.0.0"

healthCheckPath: "/app/kibana"

# Allows you to add any config files in /usr/share/kibana/config/

# such as kibana.yml

kibanaConfig: {}
#   kibana.yml: |
#     key:
#       nestedkey: value

# If Pod Security Policy in use it may be required to specify security context as well as service account
podSecurityContext:
  fsGroup: 1000

securityContext:
  capabilities:
    drop:
    - ALL
  # readOnlyRootFilesystem: true
  runAsNonRoot: true
  runAsUser: 1000

serviceAccount: ""

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

httpPort: 5601

extraContainers: ""
# - name: dummy-init
#   image: busybox
#   command: ['echo', 'hey']

extraInitContainers: ""
# - name: dummy-init
#   image: busybox
#   command: ['echo', 'hey']

updateStrategy:
  type: "Recreate"

service:
  type: ClusterIP
  loadBalancerIP: ""
  port: 5601
  nodePort: ""
  labels: {}
  annotations: {}
    # cloud.google.com/load-balancer-type: "Internal"
    # service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0
    # service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    # service.beta.kubernetes.io/openstack-internal-load-balancer: "true"
    # service.beta.kubernetes.io/cce-load-balancer-internal-vpc: "true"
  loadBalancerSourceRanges: []
    # 0.0.0.0/0

# Configure the ingress so Kibana can be reached by domain name.
ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/whitelist-source-range: 124.133.53.77/32,64.115.0.0/16,64.105.0.0/16 # allowlist of source ranges permitted to connect
    kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - kibana.wangfeng.com
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

readinessProbe:
  failureThreshold: 3
  initialDelaySeconds: 10
  periodSeconds: 10
  successThreshold: 3
  timeoutSeconds: 5

imagePullSecrets: []

nodeSelector: {}

tolerations: []

affinity: {}

nameOverride: ""

fullnameOverride: ""

lifecycle: {}
  # preStop:
  #   exec:
  #     command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
  # postStart:
  #   exec:
  #     command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

# Deprecated - use only with versions < 6.6

elasticsearchURL: "" # "http://elasticsearch-master:9200"

Install Kibana with Helm:

helm install -n efk kibana kibana/
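Before DNS for the ingress host is in place, Kibana can be checked through a local port-forward. The sketch below assumes the chart names its service `<release>-kibana`, so the release name `kibana` used above would give `kibana-kibana`; confirm the real name with `kubectl get svc -n efk`. The helper name `kibana_port_forward` is made up for this example.

```shell
#!/usr/bin/env bash
# Forward a local port to the Kibana service for a quick check that bypasses the ingress.
# Assumption: the service is named "<release>-kibana" (verify with `kubectl get svc -n efk`).
kibana_port_forward() {
  local release="${1:-kibana}" ns="${2:-efk}" port="${3:-5601}"
  kubectl port-forward -n "$ns" "svc/${release}-kibana" "${port}:5601"
}

# Usage (blocks until interrupted; then open http://localhost:5601):
#   kibana_port_forward
```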

4. Verify the deployment

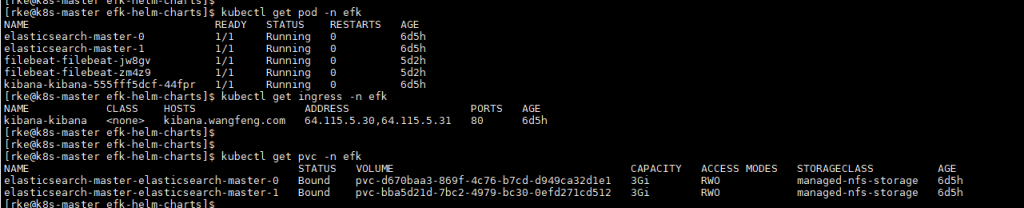

kubectl get pod -n efk

kubectl get ingress -n efk

kubectl get pvc -n efk

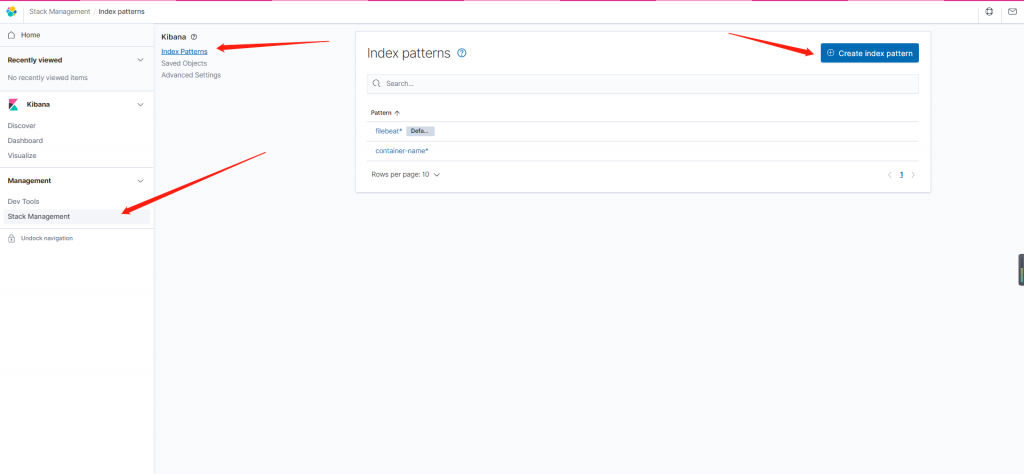
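Rather than re-running `kubectl get pod` until everything is up, the rollout of each workload can be awaited with `kubectl rollout status`. In the sketch below the resource names are assumptions based on the charts' default naming for the release names used in this article; list the actual ones with `kubectl get statefulset,daemonset,deployment -n efk`. The helper name `wait_for_efk` is made up for this example.

```shell
#!/usr/bin/env bash
# Wait for the three workloads to finish rolling out; stop at the first failure.
# Assumption: resource names follow the charts' default naming scheme.
wait_for_efk() {
  local ns="${1:-efk}" timeout="${2:-300s}" target
  for target in statefulset/elasticsearch-master daemonset/filebeat-filebeat deployment/kibana-kibana; do
    kubectl rollout status "$target" -n "$ns" --timeout="$timeout" || return 1
  done
}

# Usage:
#   wait_for_efk            # namespace efk, 300s timeout per workload
#   wait_for_efk efk 600s
```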

5. Log in to Kibana, configure an index pattern, and view the logs

Create the index pattern:

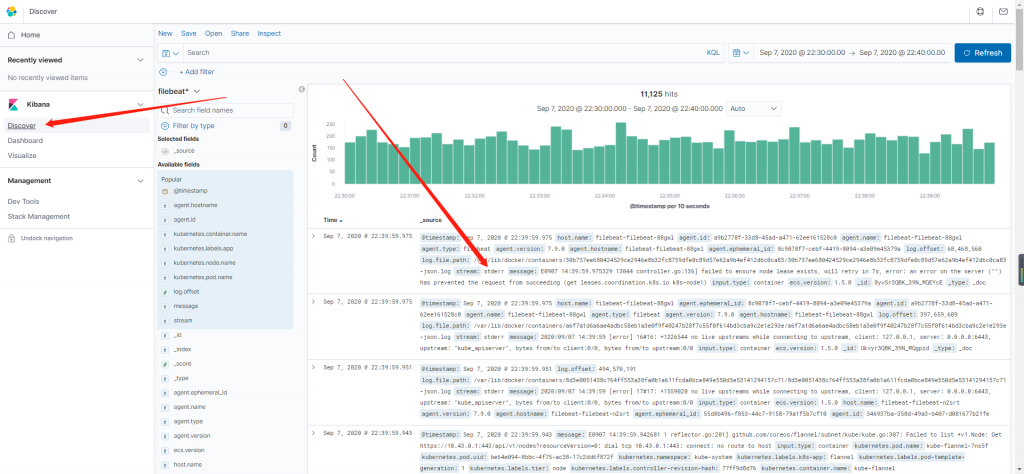
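The same index pattern can also be created without the UI through Kibana's saved objects API. The sketch below assumes Kibana is reachable at the URL passed in (for example `http://localhost:5601` through a port-forward) and that no authentication is configured; the helper names are made up for this example.

```shell
#!/usr/bin/env bash
# Build the JSON body for an index-pattern saved object.
index_pattern_payload() {
  local title="$1" time_field="${2:-@timestamp}"
  printf '{"attributes":{"title":"%s","timeFieldName":"%s"}}' "$title" "$time_field"
}

# POST the index pattern to Kibana.
# Assumption: $1 is a reachable Kibana base URL with no authentication in front of it.
create_index_pattern() {
  local kibana_url="$1" title="$2"
  curl -s -X POST "${kibana_url}/api/saved_objects/index-pattern" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -d "$(index_pattern_payload "$title")"
}

# Usage:
#   create_index_pattern http://localhost:5601 'container-name-*'
```

The pattern `container-name-*` matches the daily indices produced by the `indices` rule in the Filebeat configuration.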

View the logs

GitHub repository: https://github.com/elastic/helm-charts